Reuse tracking

Revision as of 20:40, 9 August 2012

RDF metadata presents information about a work's ancestry in a machine-readable way. The websites of users who properly used our license chooser tool already have this metadata in place. While it is possible to trace backwards to find a derived work's source, it is impossible to trace forwards to find all of a source work's derivatives without the aid of extra infrastructure.
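As a rough illustration of why tracing backwards is easy, the sketch below reads a page's "based on" link straight out of its markup. It assumes the attribution HTML marks the source work's link with `rel="dct:source"` (Dublin Core terms). It also matches that attribute value literally instead of doing full RDFa processing. `find_sources` is a hypothetical helper name.

```python
from html.parser import HTMLParser


class SourceLinkParser(HTMLParser):
    """Collects href values of <a> tags whose rel includes "dct:source"."""

    def __init__(self):
        super().__init__()
        self.sources = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        # rel may hold several space-separated values, e.g. "dct:source nofollow"
        rels = (attr.get("rel") or "").split()
        if tag == "a" and "dct:source" in rels and attr.get("href"):
            self.sources.append(attr["href"])


def find_sources(html):
    """Return the URLs a page declares as the works it is derived from."""
    parser = SourceLinkParser()
    parser.feed(html)
    parser.close()
    return parser.sources


page = '''<p>Based on a work at
<a xmlns:dct="http://purl.org/dc/terms/" href="http://example.org/original"
   rel="dct:source">example.org</a>.</p>'''
print(find_sources(page))  # ['http://example.org/original']
```

Going the other way, from the original to its remixes, has no equivalent: nothing on the original's page points at the pages that reuse it.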

On this page, you will find proposals for several ethical solutions to this problem: solutions that respect users' privacy and do not involve radio-tagging people with malware or DRM. These may be systems that Creative Commons would prototype as a reference for other organizations building their own infrastructure. They may also be systems that we would build and maintain ourselves, providing an API to interested parties, either free (in the spirit of open) or for a small fee to help offset hosting costs. Each of the proposed systems below has its own advantages and disadvantages; none of them is a silver bullet.

Proposal 1: Independent Refback Tracking

When a user opens a webpage, the browser sends some information to the server. Of particular interest is the *referrer string*. It is sent in the HTTP "Referer" header, which the specification spells with a single "r". Put simply, the referrer string contains the URL of the webpage that linked the user to the page they are currently viewing.
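On the server side, the referrer is just another request header. Below is a minimal sketch, assuming a Python WSGI application (WSGI middleware is one of the server-side options discussed for these proposals). WSGI places the header in the environ under the key `HTTP_REFERER`. That key is simply missing when the browser sends no referrer. `show_referrer` is a hypothetical name.

```python
def show_referrer(environ, start_response):
    """Minimal WSGI app that reports which page linked the visitor here."""
    # The Referer request header arrives in the WSGI environ as HTTP_REFERER;
    # the key is absent when the browser did not send one.
    referrer = environ.get("HTTP_REFERER", "unknown")
    body = f"You came from: {referrer}\n".encode("utf-8")
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8"),
                              ("Content-Length", str(len(body)))])
    return [body]


# Calling the app directly with a hand-built environ, no server needed.
print(show_referrer({"HTTP_REFERER": "http://example.com/remix"},
                    lambda status, headers: None))
# [b'You came from: http://example.com/remix\n']
```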

The Refback Tracking framework would be hosted by the respective content providers and served independently of CC. The advantage is that, once a working system is prototyped, it would cost us little (potentially nothing) to host. It would therefore require minimal maintenance.

The disadvantage of this approach is that it can only trace direct remixes of a work, not remixes of remixes.

Here is how it works:

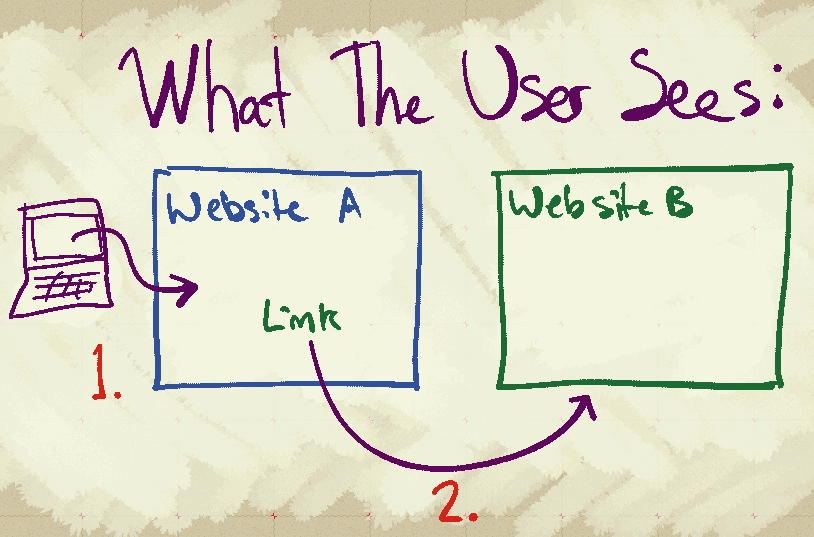

The above picture describes the sequence of events that triggers the tracking mechanism, shown from the user's perspective. The steps are as follows:

1. The user opens Website A, which contains a remixed work. The remix properly attributes the work it is derived from, both visibly for the user and invisibly with metadata.

2. The curious user clicks on the link to the original work, and is taken to Website B as expected.

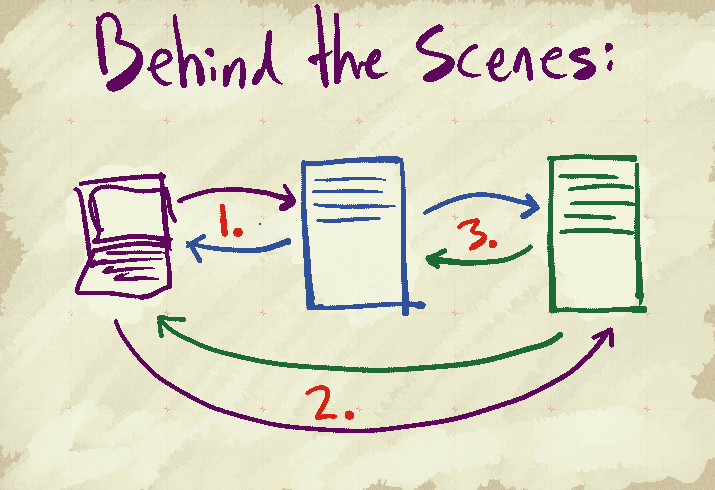

Here is what happens behind the scenes:

1. The user opens a website: the user's browser requests a page from a server, and the server replies with the page. The page contains a remixed work that is attributed with metadata.

2. The curious user clicks on the link to the original work. The user's browser sends a request to the server hosting the page of the original work. This request's referrer string contains the URL of the webpage with the remixed work on it. The server replies to the user's request as expected and takes note of the URL in the referrer string. It can do this either with JavaScript embedded in the page or with special code running on the web server itself.

3. The server hosting the original work downloads the page of the remixed work (as noted from the referrer string), and the server of the remixed work replies as expected. The server hosting the original work reads the metadata on the downloaded page to verify that it does contain a remix of the original work. If it does, the server records the URL in a database, to be used for generating reuse statistics.
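The server-side variant of steps 2 and 3 might look roughly like the sketch below. It assumes a Python WSGI site serving one original work whose URL is known to the middleware. It also assumes that remixes mark their source with `rel="dct:source"`. `RefbackTracker` and `RefbackMiddleware` are illustrative names, and SQLite stands in for whatever database the site actually uses.

```python
import sqlite3
from html.parser import HTMLParser
from urllib.request import urlopen


class SourceLinkParser(HTMLParser):
    """Collects href values of <a> tags whose rel includes "dct:source"."""

    def __init__(self):
        super().__init__()
        self.sources = set()

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "a" and "dct:source" in (attr.get("rel") or "").split() and attr.get("href"):
            self.sources.add(attr["href"])


def fetch(url):
    """Step 3: download the page the visitor came from."""
    with urlopen(url, timeout=10) as resp:
        return resp.read().decode("utf-8", errors="replace")


class RefbackTracker:
    def __init__(self, db_path=":memory:", fetcher=fetch):
        self.fetcher = fetcher
        self.db = sqlite3.connect(db_path)
        self.db.execute("CREATE TABLE IF NOT EXISTS remixes "
                        "(source TEXT, remix TEXT, PRIMARY KEY (source, remix))")

    def note_referrer(self, original, referrer):
        """Record `referrer` as a remix of `original` if its metadata says so."""
        if not referrer or referrer == original:
            return False
        try:
            html = self.fetcher(referrer)
        except (OSError, ValueError):  # unreachable host, HTTP error, bad URL
            return False
        parser = SourceLinkParser()
        parser.feed(html)
        parser.close()
        if original not in parser.sources:
            return False  # an ordinary link, not an attributed remix
        with self.db:  # commits the insert
            self.db.execute("INSERT OR IGNORE INTO remixes VALUES (?, ?)",
                            (original, referrer))
        return True

    def remixes_of(self, original):
        rows = self.db.execute(
            "SELECT remix FROM remixes WHERE source = ? ORDER BY remix", (original,))
        return [row[0] for row in rows]


class RefbackMiddleware:
    """Step 2: wrap the site's WSGI app and note the Referer of each request."""

    def __init__(self, app, tracker, original_url):
        self.app = app
        self.tracker = tracker
        self.original_url = original_url

    def __call__(self, environ, start_response):
        self.tracker.note_referrer(self.original_url, environ.get("HTTP_REFERER"))
        return self.app(environ, start_response)
```

To keep the example short, this sketch fetches the referring page inside the request. A real deployment would more likely queue referrers and verify them in the background. It should also restrict which URLs it is willing to fetch, because the Referer header is supplied by the client.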

Proposal 2: Hosted Refback Tracking

This approach is a variation of the first proposal, in which CC runs an instance of the server-side component described there. The advantage is that, if the service sees significant adoption, the aggregated data could be used to construct a better tree of derivative works. However, this advantage only materializes if the service is sufficiently widely used. Otherwise, this proposal has the same disadvantage as the first version. It would also add cost and liability for CC, while giving up the first version's advantage of near-zero hosting cost.
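To make the aggregation argument concrete: when each site only records its own direct remixes, one site's records stop after a single hop. Pooling records from many sites allows a transitive walk that also finds remixes of remixes. Here is a minimal sketch; the helper names and example URLs are hypothetical.

```python
from collections import defaultdict, deque


def build_graph(refbacks):
    """Map each source URL to the set of URLs recorded as its direct remixes."""
    children = defaultdict(set)
    for source, remix in refbacks:
        children[source].add(remix)
    return children


def all_derivatives(children, root):
    """Breadth-first walk: every direct and indirect remix reachable from root."""
    found, queue = set(), deque([root])
    while queue:
        for remix in children.get(queue.popleft(), ()):
            if remix != root and remix not in found:  # guards against cycles
                found.add(remix)
                queue.append(remix)
    return found


# Hypothetical records: each site only knows about its own direct remixes.
site_a = [("http://a.example/photo", "http://b.example/collage")]
site_b = [("http://b.example/collage", "http://c.example/poster")]

print(sorted(all_derivatives(build_graph(site_a), "http://a.example/photo")))
# ['http://b.example/collage']
print(sorted(all_derivatives(build_graph(site_a + site_b), "http://a.example/photo")))
# ['http://b.example/collage', 'http://c.example/poster']
```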

Hosted Refback Tracking is a variation of Independent Refback Tracking, in which CC runs the server-side database component described in the first proposal, and the refback is sent to us via JavaScript embedded on the website hosting the original work.

This version requires that we host and maintain a special service, which adds the disadvantages of both cost and liability. It has two advantages over the first proposal. The first is that the website hosting the original content only needs to include a bit of JavaScript that we would provide. This is far easier than requiring the third party to set up an nginx module, Apache module, or WSGI middleware, or to roll their own backend. The other is that, with significant adoption, it might be possible to construct a better graph, because the data would be aggregated in one place.
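Here is a sketch of the receiving end of such a service. It assumes the embedded JavaScript sends two query parameters to a hypothetical `/refback` endpoint: the original page's URL (`location.href`) and the visitor's referrer (`document.referrer`). The endpoint, the parameter names `page` and `ref`, and the in-memory `PENDING` list are all assumptions. Anyone can call such an endpoint, so submissions are only queued, not trusted. Each one would still need the same metadata check as in the first proposal before it counts as a remix.

```python
from urllib.parse import parse_qs

# Hypothetical in-memory queue; a real service would use a database or task queue.
PENDING = []


def refback_endpoint(environ, start_response):
    """Hypothetical CC-hosted endpoint: GET /refback?page=<original>&ref=<referrer>."""
    params = parse_qs(environ.get("QUERY_STRING", ""))
    page = params.get("page", [None])[0]
    ref = params.get("ref", [None])[0]
    if not page or not ref or not ref.startswith(("http://", "https://")):
        start_response("400 Bad Request", [("Content-Type", "text/plain")])
        return [b"page and ref parameters are required\n"]
    # Unverified claim: the referring page still has to be fetched and its
    # metadata checked before this pair is counted as a remix.
    PENDING.append((page, ref))
    start_response("204 No Content", [])
    return []
```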

Proposal 3: Hosted Scraped Data Api

Creative Commons already has two pieces of infrastructure that could be adapted for reuse tracking. First, when a website uses the HTML from our license chooser to mark the page with attribution information and metadata, we also host the license badge image. Requests for that image carry a referrer string, so the badges' download statistics give us an estimate of license usage in the wild. Second, we have a tool called Deedscraper. When a deed is opened, JavaScript on the page sends the referrer string to the Deedscraper server, which reads the metadata on the referring page. This allows the deed to be safely updated after page load with information for attributing the linked work.
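The badge-based estimate can be sketched as counting distinct referring pages per license. The sketch assumes badge requests are available as `(path, referrer)` pairs from the image server's logs. It also assumes badge paths look like `/l/by-sa/3.0/88x31.png`. Both are assumptions about our setup, not a description of it. Counting distinct pages instead of raw requests keeps a popular page from being counted once per visit.

```python
import re
from collections import Counter

# Assumed badge path layout, e.g. /l/by-sa/3.0/88x31.png
BADGE_PATH = re.compile(r"^/l/(?P<license>[a-z-]+)/(?P<version>\d+(?:\.\d+)*)/")


def license_usage(requests):
    """Count distinct referring pages per license from (path, referrer) pairs."""
    seen = set()
    for path, referrer in requests:
        match = BADGE_PATH.match(path)
        # "-" is how common access-log formats record a missing referrer
        if match and referrer and referrer != "-":
            seen.add((f"{match['license']} {match['version']}", referrer))
    return Counter(name for name, _page in seen)


log = [
    ("/l/by/3.0/88x31.png", "http://a.example/"),
    ("/l/by/3.0/80x15.png", "http://a.example/"),   # same page, smaller badge
    ("/l/by/3.0/88x31.png", "http://c.example/"),
    ("/l/by-sa/3.0/88x31.png", "http://b.example/"),
    ("/l/by-sa/3.0/88x31.png", "-"),                # no referrer sent
]
print(license_usage(log))  # Counter({'by 3.0': 2, 'by-sa 3.0': 1})
```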

Under this proposal, whenever a license badge request arrives with a referrer string, Deedscraper would read the metadata from the referring page and record it in a database. Thus, we can build a bi-directional graph of remixes. This data could be made available through a simple API or dashboard.
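Here is a minimal sketch of the bi-directional index. It assumes Deedscraper yields, for each scraped page, the list of source URLs declared in its metadata. `RemixGraph` and the JSON field names are hypothetical. They only illustrate that storing each edge in both directions makes "what is this derived from?" and "what was derived from this?" equally cheap lookups.

```python
import json
from collections import defaultdict


class RemixGraph:
    """Bi-directional index built from (page, declared sources) records."""

    def __init__(self):
        self.sources = defaultdict(set)      # page -> works it is derived from
        self.derivatives = defaultdict(set)  # work -> pages derived from it

    def add_scraped_page(self, page, declared_sources):
        # Store every edge twice so both directions are a single lookup.
        for source in declared_sources:
            self.sources[page].add(source)
            self.derivatives[source].add(page)

    def lookup(self, url):
        """JSON payload a hypothetical API endpoint could return for `url`."""
        return json.dumps({
            "url": url,
            "derived_from": sorted(self.sources.get(url, ())),
            "derivatives": sorted(self.derivatives.get(url, ())),
        })


g = RemixGraph()
g.add_scraped_page("http://b.example/collage", ["http://a.example/photo"])
g.add_scraped_page("http://c.example/poster", ["http://b.example/collage"])
print(g.lookup("http://b.example/collage"))
# {"url": "http://b.example/collage", "derived_from": ["http://a.example/photo"], "derivatives": ["http://c.example/poster"]}
```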

This service would yield significantly better data than the first two proposals and would be straightforward to set up, but it would require ongoing maintenance by the tech team, and make Kinkade very grumpy.